Benchmarking Autonomous Scattering Experiments Illustrated on TAS

- ¹Jülich Centre for Neutron Science (JCNS) at Heinz Maier-Leibnitz Zentrum (MLZ), Forschungszentrum Jülich GmbH, Garching, Germany

- ²Center for Advanced Mathematics for Energy Research Applications (CAMERA), Lawrence Berkeley National Laboratory, Berkeley, CA, United States

- ³Institut Laue-Langevin, Grenoble, France

With the advancement of artificial intelligence and machine learning methods, autonomous approaches are recognized to have great potential for performing more efficient scattering experiments. In our view, it is crucial for such approaches to provide thorough evidence of their performance improvements in order to increase acceptance within the scientific community. Therefore, we propose a benchmarking procedure designed as a cost-benefit analysis that is applicable to any scattering method that collects data sequentially during an experiment. For a given approach, the performance assessment is based on how much benefit it is able to acquire, given a certain cost budget, in predefined test cases. Different approaches can thus be compared and can make their advantages explicit and visible. Key components of the procedure, i.e., cost measures, benefit measures, and test cases, are made precise for the setting of three-axes spectrometry (TAS) as an illustration. Finally, we discuss neglected aspects and possible extensions for the TAS setting and comment on the procedure’s applicability to other scattering methods. A Python implementation of the procedure is also provided to simplify its use by interested researchers in the field.

1 Introduction

Scattering experiments have so far been carried out in a manual or semi-automated way, i.e., experimenters had to organize the measuring process and determine what and where to measure (next). With the rise of artificial intelligence and machine learning techniques, it is natural to ask whether there are autonomous approaches that allow performing experiments in a more efficient way. In other words, it is worthwhile to see if, for a fixed cost budget like experimental time available, autonomous approaches can perform “better” experiments. In the following, by “autonomous approach” and related phrases, we refer to a decision-making algorithm that is combined with an automated communication and analysis infrastructure to create a closed loop with an instrument control system and thus is, after initialization, able to conduct measurements without human intervention.

Indeed, autonomous approaches have recently been developed for scattering experiments (Noack et al., 2020; Durant et al., 2021a; Durant et al., 2021b; Maffettone et al., 2021; Noack et al., 2021; Teixeira Parente et al., 2021). A way to compare and assess the performance of different approaches (manual as well as autonomous), however, is currently lacking. From our perspective, benchmarking and measuring performance are of the utmost importance at this stage for the establishment and progress of autonomous approaches in the field of materials analysis by scattering methods. For example, similar efforts have already produced a benchmark for oxygen evolution reaction catalyst discovery (Rohr et al., 2020) and evaluated the performance of Bayesian optimization (Frazier and Wang, 2016) across several materials science domains (Liang et al., 2021).

In this work, we propose a benchmarking procedure which is designed as a cost-benefit analysis and can be applied to any scattering method sequentially collecting data of a certain quantity of interest during an experiment. Key components of the procedure that mainly drive the performance assessment are cost measures, benefit measures, and test cases. Cost measures specify the type of cost that is to be minimized and benefit measures characterize how “success” is defined. Test cases describe particular scenarios depending on the scattering method and determine which aspects the approaches are tested on. We emphasize that benchmarking processes in the general field of machine learning are potentially fragile (Dehghani et al., 2021) and therefore need to be defined carefully. After the general formulation of the procedure, all of the mentioned components are made precise for the setting of three-axes spectrometry (TAS).

TAS is an established technique for materials analysis by inelastic neutron scattering (Shirane et al., 2002). For decades, three-axes spectrometers have measured the dynamic properties of solids, e.g., phonons and magnetic excitations, for a wide range in both energy transfer (E) and momentum (Q) space and provide the opportunity to detect weak signals with high resolution. Compared to techniques like time-of-flight spectroscopy (TOF), profiting from large detector assemblies, the sequential, point-by-point, measurements in TAS experiments come with the cost of moving the three instrument axes—around a monochromator, a sample, and an analyzer—individually in real space, which is rather slow (seconds to minutes). Furthermore, instead of operating classically with a single detector, recent developments attempt to use multi-detectors for higher data acquisition speed at the expense of reduced measuring flexibility (Kempa et al., 2006; Lim et al., 2015; Groitl et al., 2016; Groitl et al., 2017). However, as a first step, we concentrate on classical TAS experiments with a single detector here.

Semi-automated TAS experiments are often systematically organized on a grid in Q-E space with predefined steps. This procedure is frequently inefficient as it collects a substantial number of measurement points in the background, i.e., in areas with erroneous signals that most often come from undesired scattering events inside the sample itself, the sample environment, or components of the instrument. If autonomous approaches, however, were able to collect information on intensities in signal regions and their shape faster, they could enable a more efficient (manual or autonomous) assessment of the investigated system’s behaviour.

The manuscript is divided into sections as follows. Section 2 gives fundamental definitions of the key components. The benchmarking procedure is specified in Section 3 and Section 4 contains an illustration of the key components in a TAS setting. Finally, we provide a discussion in Section 5 and a conclusion in Section 6.

2 Definitions

This section provides definitions for the central components of the benchmarking procedure (Section 3), i.e., for test cases, experiments, cost measures, and benefit measures. For TAS experiments, these notions are made precise in Section 4.

Definition (Test case). A test case t is a collection of details necessary to conduct a certain scattering experiment.

Here, a scattering experiment is viewed as a sequential collection of data points, the meaning of which depends on the particular scattering method. Recall that an N-tuple, N ∈ ℕ, is a finite ordered list of N elements.

Definition (t-Experiment). Let t be a test case. A t-experiment

where z_j denote data points and

In order to measure costs and benefits of experiments in the context of a certain test case, we need a formalization of cost and benefit measures.

Definition (Cost/Benefit measure). Let t be a test case. Both a cost measure c = c_t and a benefit measure μ = μ_t are real-valued functions of t-experiments

3 Benchmarking Procedure

In this section, we use the notions from Section 2 to formulate the main outcome of this manuscript and suggest a step-by-step procedure for benchmarking scattering experiments. The result of a benchmark is a collection of sequences of benefit values (cf. Table 1) that allows the performance of experiments to be evaluated in the context of predefined test cases.

TABLE 1. Result of a benchmark. Each row, representing an experiment

Benchmarking procedure:

1) Specify L ∈ ℕ test cases t^{(ℓ)}, ℓ = 1, … , L.

2) Specify a cost measure c and define

3) Specify a benefit measure μ and define

For each test case t^{(ℓ)}, perform the following steps:

4) Specify M^{(ℓ)} ∈ ℕ ascending “milestone values”

5) Conduct a t^{(ℓ)}-experiment

6) For

be the collection of the first J data points in

be the maximum number of first data points in

Then, for each milestone value

Summarizing, a benchmark requires the specification of test cases, a cost measure, a benefit measure, and a sequence of ascending milestone values for each test case. The result is a collection of sequences with benefit values (cf. Table 1) that can be achieved using the milestone values as cost budgets.
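The steps above can be sketched in a few lines of Python. This is a minimal illustration and not the code from the accompanying repository: the function name `benefit_at_milestones`, the toy cost and benefit callables, and the reading of "large enough" as "the full experiment costs at least the largest milestone" are all assumptions made for this example.

```python
from typing import Callable, List, Sequence, TypeVar

Z = TypeVar("Z")  # type of a single data point (depends on the scattering method)


def benefit_at_milestones(
    experiment: Sequence[Z],
    cost: Callable[[Sequence[Z]], float],
    benefit: Callable[[Sequence[Z]], float],
    milestones: Sequence[float],
) -> List[float]:
    """Return one benefit value per milestone, i.e., one row of Table 1."""
    if not milestones:
        return []
    if list(milestones) != sorted(milestones):
        raise ValueError("milestone values must be ascending")
    if cost(experiment) < milestones[-1]:
        # Assumed reading of "large enough": the experiment must use up
        # at least the largest cost budget.
        raise ValueError("experiment is too short for the largest milestone")

    values = []
    j = 0  # number of leading data points affordable so far
    for budget in milestones:
        # Assumes the cost never decreases when a data point is appended,
        # so the largest affordable prefix is found by scanning forward.
        while j < len(experiment) and cost(experiment[: j + 1]) <= budget:
            j += 1
        values.append(benefit(experiment[:j]))
    return values


# Toy example: every data point costs one unit, and the "benefit" is an
# error-like value that shrinks as more points are collected.
toy_experiment = [0.1, 0.4, 0.2, 0.9]
toy_values = benefit_at_milestones(
    toy_experiment,
    cost=lambda points: float(len(points)),
    benefit=lambda points: 1.0 / (1.0 + len(points)),
    milestones=[1.0, 3.0],
)
```

Because the milestones are ascending and the cost is assumed to be monotone, the prefix length `j` only ever grows, so the whole row is computed in a single pass over the experiment.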

Using their results, different approaches can now be compared by, for example, plotting the function

for each test case t^{(ℓ)} and each approach. Section 4.4 and Figure 1 provide a demonstration for a particular test case from the TAS context. Also, in the context of the specified cost and benefit measures, the results could support strong claims of the following kind:

“Approach A led to better experiments in p% of all test cases compared to approaches B, C, etc.”
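A claim of this kind could be computed from two benchmark results roughly as follows. The sketch assumes that each result is stored as a mapping from a test case name to its sequence of benefit values, and that "better" means a strictly lower value at the last milestone, which suits error-like benefit measures. Both the data layout and the function name `win_percentage` are hypothetical.

```python
from typing import Dict, Sequence


def win_percentage(
    results_a: Dict[str, Sequence[float]],
    results_b: Dict[str, Sequence[float]],
) -> float:
    """Percentage of shared test cases in which approach A beats approach B.

    Assumption: "better" means a strictly lower benefit value at the last
    milestone, which fits error-like benefit measures.
    """
    shared = sorted(set(results_a) & set(results_b))
    if not shared:
        raise ValueError("no common test cases to compare")
    wins = sum(1 for name in shared if results_a[name][-1] < results_b[name][-1])
    return 100.0 * wins / len(shared)


# Made-up benefit values for four test cases and two milestones each.
approach_a = {"t1": [0.5, 0.2], "t2": [0.6, 0.4], "t3": [0.3, 0.1], "t4": [0.9, 0.5]}
approach_b = {"t1": [0.5, 0.3], "t2": [0.5, 0.3], "t3": [0.4, 0.2], "t4": [0.8, 0.6]}
p = win_percentage(approach_a, approach_b)
```

Other comparison rules, for example comparing at every milestone instead of only the last one, would fit the same structure.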

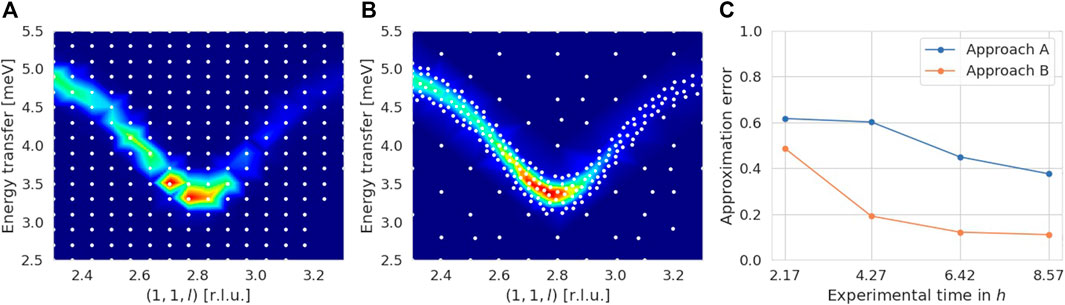

FIGURE 1. Example for an outcome of our benchmarking procedure. Two approaches [in (A) and (B)] perform an experiment in the context of a test case including an intensity function of a phonon defined on

4 Illustration on TAS

In this section, the general formulation of the benchmarking procedure and its components is made precise for TAS experiments.

4.1 TAS Setting

In TAS experiments, the concept of a three-dimensional reciprocal space Q is essential. For a given sample (or material), an element of Q is denoted by q = (h,k,l)^⊤. An element of the energy transfer space E is denoted by ω. Furthermore, we call (q,ω)^⊤ ∈ ℝ^r, r = 4, the Q-E variables. For details, we refer to Shirane et al. (2002).

Since TAS experiments are carried out only along n ≤ r, n ∈ ℕ, predefined directions in Q-E space, we introduce experiment variables

for a full rank matrix W ∈ ℝ^{r×n} and an offset b ∈ ℝ^r. It follows that

for (q,ω)^⊤ ∈ ℝ^r. Note that T^{-1}(T(x)) = x for each x ∈ ℝ^n, but T(T^{-1}(q, ω)) = (q,ω)^⊤ only for (q,ω)^⊤ ∈ T(ℝ^n).
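For orientation, the relation between experiment variables and Q-E variables described above can be written out as follows. This is a sketch that is consistent with the two identities just stated. It assumes that T is the affine map induced by W and b and that T^{-1} is the left inverse built from the Moore-Penrose pseudoinverse of W, which exists because W has full column rank for n ≤ r. The exact display used in the original is not reproduced here.

```latex
\begin{align}
  (q, \omega)^\top &= T(x) := W x + b,
    & x &\in \mathbb{R}^n, \\
  x &= T^{-1}(q, \omega) := (W^\top W)^{-1} W^\top \bigl( (q, \omega)^\top - b \bigr),
    & (q, \omega)^\top &\in \mathbb{R}^r .
\end{align}
```

With these definitions, T^{-1}(T(x)) = (W^⊤W)^{-1}W^⊤(Wx + b − b) = x for every x, whereas T(T^{-1}(q, ω)) projects (q,ω)^⊤ − b orthogonally onto the range of W before adding b back, so it returns (q,ω)^⊤ only if that point already lies in T(ℝ^n).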

Each experiment variable x_k ranges only between its respective limits of investigation

The corresponding domain of interest

The representation of a TAS instrument is divided into two structures, an instrument configuration and an instrument.

Definition (Instrument configuration). The tuple

for a scan mode sm ∈ {“constant k_i”, “constant k_f”}, a scan constant (k_i or k_f) sc > 0, and a vector of scattering senses

To measure intensities at a certain point in Q-E space, a TAS instrument needs to steer its axes to six related angles. However, it is enough to regard only a subset of four angles due to dependencies.

Definition (Instrument). The tuple

for a vector of angular velocities

The lattice parameters of the monochromator and the analyzer are necessary for a well-defined translation of points in Q-E space to associated angles of instrument axes. The so-called angle map

is induced by a sample, its orientation, and an instrument Ins and its configuration Π. Note that the angular velocity v_k refers to the axis with angle Ψ_k(q, ω). The domain dom(Ψ) ⊆ Q × E denotes the set of points in Q-E space for which Ψ is well-defined, i.e., points that are reachable by the instrument Ins. Furthermore, we define

as the set of points in

is well-defined for

For a given configuration Π and instrument Ins, we can specify a resolution function (Shirane et al., 2002)

i.e., each point in

A sample and its orientation induce a so-called scattering function

Given a resolution function φ, we get an associated intensity function

For benchmarking, we assume that i can be exactly evaluated. Of course, this is not possible in real experiments due to background and statistical noise.

For our suggestion of a benefit measure (Section 4.3), we additionally need an intensity threshold τ > 0.

The experimental time is defined as the sum of the cumulative counting time at measurement points in Q-E space and the cumulative time for moving the instrument axes. For simplicity, we assume here that the single counting time, i.e., the counting time at a single measurement point, denoted by T_count ≥ 0, is constant for each point.

Now, we can specify the notion of a TAS test case and a TAS experiment.

Definition (TAS test case). A tuple

for a sample and its orientation, an affine transformation induced by a full rank matrix W ∈ ℝ^{r×n} and an offset b ∈ ℝ^r (Eq. 8), a domain of interest

Note that a TAS test case t induces an intensity function

In the context of a certain test case, a TAS experiment is defined as a collection of intensities i(x) at locations

Definition (TAS t-Experiment). Let t be a TAS test case. A TAS t-experiment

where

4.2 Cost Measure

We need to align our proposition of a cost measure with the limited experimental time, which is the critical quantity in a TAS experiment. Recall that the experimental time is defined as the sum of the cumulative counting time and the cumulative time for axes movement (Section 4.1).

For the cumulative counting time, we define

where T_count ≥ 0 denotes the constant single counting time.

The cost measure representing the cumulative time for moving the instrument axes is defined as

for a metric

where

The measures c_count and c_axes can be used either individually or additively to form a cost measure representing the entire experimental time. For the latter, we finally define

In our opinion, this cost measure is the most suitable to reflect time costs in a general TAS experiment.
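The two cost measures and their sum can be illustrated with a short sketch. It does not implement the angle map Ψ; instead, each measurement point is assumed to be given directly as its four axis angles. The travel time between two points is taken as the largest per-axis time, i.e., angle difference divided by angular velocity, under the assumption that all axes move simultaneously. All function names are hypothetical.

```python
from typing import Sequence, Tuple

Angles = Tuple[float, float, float, float]  # four axis angles, e.g., in degrees


def counting_cost(n_points: int, t_count: float) -> float:
    """Cumulative counting time for a constant single counting time."""
    return n_points * t_count


def axes_travel_time(a: Angles, b: Angles, velocities: Sequence[float]) -> float:
    """Time to move from angles a to angles b.

    Assumption: all axes move at the same time, so the slowest axis
    determines the travel time.
    """
    return max(abs(x - y) / v for x, y, v in zip(a, b, velocities))


def axes_cost(points: Sequence[Angles], velocities: Sequence[float]) -> float:
    """Cumulative axes movement time along consecutive measurement points."""
    return sum(
        axes_travel_time(p, q, velocities) for p, q in zip(points, points[1:])
    )


def total_cost(
    points: Sequence[Angles], velocities: Sequence[float], t_count: float
) -> float:
    """Entire experimental time: counting time plus axes movement time."""
    return counting_cost(len(points), t_count) + axes_cost(points, velocities)


# Toy example: three measurement points, angular velocities in degrees per
# second, and 5 s of counting per point (all values made up).
toy_points = [(0.0, 0.0, 0.0, 0.0), (10.0, 20.0, 0.0, 0.0), (10.0, 20.0, 30.0, 0.0)]
toy_velocities = (1.0, 2.0, 3.0, 1.0)
toy_total = total_cost(toy_points, toy_velocities, t_count=5.0)
```

In the toy example, each of the two moves takes 10 s, so the movement time (20 s) exceeds the counting time (15 s), which shows why the order of measurement points can matter for the total cost.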

4.3 Benefit Measure

We propose a benefit measure that measures a type of weighted L^2 approximation error between a benchmark intensity function i = i_t and an approximation

Let us define

where ‖⋅‖ is a norm that controls how the error is measured. Note that the notion of a “benefit” refers to an “error” in this case, i.e., reducing the benefit measure μ = μ_t leads to an increase in benefit.

A suitable error norm ‖⋅‖ needs to reflect that a TAS experimenter is more interested in regions of signal than in the background. This suggests that we use i itself in a suitable definition. However, an important constraint is that signal regions with different intensities are weighted equally since they might be equally interesting. For this, we use the intensity threshold τ > 0 from the TAS test case t (Eq. 21) and define

for

is a probability density function and can be used for weighting. Finally, we set

where

for a function h and a density function ρ. Note that
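A discretized version of such a weighted error can be sketched as follows. The sketch assumes a one-dimensional, uniformly spaced grid and assumes that the threshold enters by clipping the benchmark intensity at τ and normalizing the result to a density. The text motivates this choice, but the exact formula is not shown here, so it should be read as one plausible reading. The function name and the toy data are hypothetical.

```python
import math
from typing import Callable, Sequence


def weighted_l2_error(
    true_intensity: Callable[[float], float],
    approximation: Callable[[float], float],
    grid: Sequence[float],
    tau: float,
) -> float:
    """Weighted L2 error on a uniform grid (Riemann-sum approximation)."""
    # Assumed weighting: clip the benchmark intensity at tau so that all
    # signal regions above the threshold receive the same weight.
    clipped = [min(true_intensity(x), tau) for x in grid]
    total = sum(clipped)
    if total <= 0.0:
        raise ValueError("benchmark intensity must be positive somewhere")
    # On a uniform grid the cell width cancels in the normalization.
    weights = [c / total for c in clipped]
    squared = sum(
        w * (true_intensity(x) - approximation(x)) ** 2
        for w, x in zip(weights, grid)
    )
    return math.sqrt(squared)


# Toy example: two peaks of very different height on a zero background.
PEAKS = {1.0: 10.0, 2.0: 100.0}


def toy_intensity(x: float) -> float:
    return PEAKS.get(x, 0.0)


def toy_approximation(x: float) -> float:
    # Off by 2 on both peaks and off by 5 in the background.
    return toy_intensity(x) + (2.0 if x in PEAKS else 5.0)


toy_error = weighted_l2_error(
    toy_intensity, toy_approximation, [0.0, 1.0, 2.0, 3.0], tau=10.0
)
```

In the toy example, both peaks receive the same weight although their heights differ by a factor of ten, and the larger error in the background does not contribute because the benchmark intensity is zero there.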

4.4 Test Cases

A useful set of test cases represents the variety of different scenarios that can occur in a TAS experiment. It is the set of intensity functions that is particularly important here. Therefore, we suggest creating test cases such that the corresponding induced intensity functions are composed of one or more of the following structures known in the field:

• non-dispersive structures (e.g., crystal field excitations),

• dispersive structures (e.g., spin waves or acoustic phonons),

• (pseudo-)continua (e.g., spinons or excitations in frustrated magnets).

Particular intensity functions can be created by mathematical expressions, physical simulations, or experimental data.
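As an illustration of intensity functions created by mathematical expressions, the following sketch defines a non-dispersive and a dispersive structure along one direction in Q. The Gaussian line shape, the sinusoidal dispersion of a one-dimensional chain, and all parameter values are assumptions chosen for simplicity. They are not taken from the test cases in the repository, and no resolution function is applied.

```python
import math


def gaussian(x: float, center: float, width: float) -> float:
    """Unnormalized Gaussian line shape with peak value 1."""
    return math.exp(-0.5 * ((x - center) / width) ** 2)


def non_dispersive(q: float, omega: float, energy: float = 5.0,
                   width: float = 0.5, amplitude: float = 100.0) -> float:
    """Flat mode: the peak energy does not depend on q."""
    return amplitude * gaussian(omega, energy, width)


def dispersive(q: float, omega: float, omega_max: float = 10.0,
               width: float = 0.5, amplitude: float = 100.0) -> float:
    """Acoustic-phonon-like branch with omega(q) = omega_max * |sin(pi * q)|.

    q is given in reciprocal lattice units, so the zone boundary is at q = 0.5.
    """
    branch = omega_max * abs(math.sin(math.pi * q))
    return amplitude * gaussian(omega, branch, width)


def combined(q: float, omega: float) -> float:
    """Intensity function composed of both structures."""
    return non_dispersive(q, omega) + dispersive(q, omega)
```

Evaluating `combined` on a grid of (q, ω) values gives a benchmark intensity map with one flat and one curved branch, which could then serve as the intensity function of a test case.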

As an example of an outcome of our benchmarking procedure, Figure 1 displays a comparative benchmark result of two approaches for a test case including an intensity function that reflects a soft mode of the ferroelectric phase transition at ∼ 60 K of a transverse optical phonon measured on SnTe (Weber and Heid, 2021).

This figure contains exemplifications of each benchmark component: experimental time (x-axis in Figure 1C) as cost measure (Section 4.2), approximation error (y-axis in Figure 1C) as benefit measure (Section 4.3), a phonon-type intensity function (Figures 1A,B) as part of the test case (Section 4.4), and milestone values (ticks on x-axis in Figure 1C).

5 Discussion

In this section, we discuss some open aspects of the TAS setting from Section 4 and the applicability of the benchmarking procedure from Section 3 to other scattering methods. Finally, we refer to a repository containing a software implementation.

5.1 Neglected Aspects and Possible Extensions for TAS

The TAS setting does not comprise each detail occurring in a real experiment. Indeed, we neglected some aspects which we, however, see as acceptable deviations.

Certainly the most prominent neglected aspect is background and statistical noise. We find it difficult to include them in the benchmarking procedure due to their non-deterministic nature since a comparison of approaches needs to be done in a deterministic setting. The benefit measure from Section 4.3, for instance, makes use of a “true” benchmark intensity function which would not be available in the presence of background or statistical noise.

We assumed that the single counting time is constant for each measurement point. However, since single counting times are determined by the physics of the sample and the requested statistics in real experiments, they might vary at different measurement locations. In our opinion, this variation is negligible in a first step, but can be taken into account in more complex benchmarking setups if desired.

Next, the cost measures from Section 4.2 are rather general and can be applied to any TAS instrument. They can, however, be extended with further details if benchmarking is to be done in the context of a specific instrument. For example, real TAS instruments may differ in more [PANDA (Schneidewind and Čermák, 2015)] or less [ThALES (Boehm et al., 2015)] time-consuming procedures for changing k_i values.

Furthermore, the intensity functions induced by the test cases described in Section 4.4 are derived in the context of fixed environmental parameters of the sample (such as temperature, external magnetic or electric field, etc.). Future work might extend the four-dimensional Q-E space with these parameters, i.e., r > 4, to allow for more complex intensity functions that would, however, require adjusted cost measures.

Finally, to compute all benefit values for Table 1, the benchmarking procedure requires an approach to perform an experiment that is large enough (Eq. 3). Hence, an autonomous stopping criterion is not tested although we consider it a crucial part of a fully autonomous approach.

5.2 Applicability to Other Scattering Methods

The benchmarking procedure is formulated in a modular way and depends only on abstract components like cost measures, benefit measures, and test cases. For TAS experiments, we specified these components in Section 4, but we believe that the overall procedure is also applicable to scattering methods other than TAS, such as diffraction, reflectivity, SAS/GISAS, or TOF. Indeed, experimenters for each of these methods measure costs and benefits in their own way and investigate different kinds of intensity functions.

Diffraction experiments (Sivia, 2011), for example, may have a setting similar to TAS experiments as the experimenter is interested in intensities defined over Q variables and many diffractometers need to move their components (sample, detector). Therefore, the cost and benefit measures presented above might also be useful in this context. The set of test cases, however, would need to be composed differently since regions of signal are mainly separated and small in shape. Also, additional aspects (such as shadowing, overlapping reflections, missing knowledge of symmetry, etc.) are to be taken into account.

5.3 Data Repository

Since the benchmarking procedure has an algorithmic structure, we decided to provide an implementation in the form of Python code that computes sequences of benefit values for given cost and benefit measures (cf. Table 1). Benchmark components for the TAS setting are already implemented as well. The repository, along with instructions on how to run the code, is publicly available (Teixeira Parente and Brandl, 2021). It also contains descriptions of test cases that can be complemented in the future.

6 Conclusion

In this manuscript, we have developed a benchmarking procedure for scattering experiments which is designed as a cost-benefit analysis and based on key components like cost measures, benefit measures, and test cases.

Although we have provided first suggestions for all these components in a TAS setting, the process of finding a suitable benchmark setting for the scattering community in general as well as the TAS community in particular is certainly not finished.

As an outlook for the TAS community, a useful next step could be the inclusion of non-constant single counting times since it has the potential of further savings of experimental time that we do not account for in the current setting. Also, extending the Q-E variables with environmental parameters that were assumed to be fixed would lead to a more comprehensive setting. Finally, we see the contribution to the dynamical set of test cases as another future task for the community.

Data Availability Statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://jugit.fz-juelich.de/ainx/base.

Author Contributions

MG and MTP contributed to the conception and design of the study. All authors contributed to the implementation of the study. MTP wrote the first draft of the manuscript. CF, MG, MN, AS, and MTP wrote paragraphs of the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version. GB and MTP wrote source code for implementing the benchmarking procedure.

Funding

MG and MTP received support through the project Artificial Intelligence for Neutron and X-Ray Scattering (AINX) funded by the Helmholtz AI unit of the German Helmholtz Association. MN is funded through the Center for Advanced Mathematics for Energy Research Applications (CAMERA), which is jointly funded by the Advanced Scientific Computing Research (ASCR) and Basic Energy Sciences (BES) within the Department of Energy’s Office of Science, under Contract No. DE-AC02-05CH11231.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We thank Frank Weber and Rolf Heid (both Institute for Quantum Materials and Technologies, Karlsruhe Institute of Technology) for providing scattering data for Figure 1. Also, we acknowledge discussions with Thomas Kluge (Helmholtz-Zentrum Dresden-Rossendorf).

References

Boehm, M., Steffens, P., Kulda, J., Klicpera, M., Roux, S., Courtois, P., et al. (2015). ThALES – Three Axis Low Energy Spectroscopy for Highly Correlated Electron Systems. Neutron News 26, 18–21. doi:10.1080/10448632.2015.1057050

Dehghani, M., Tay, Y., Gritsenko, A. A., Zhao, Z., Houlsby, N., Diaz, F., et al. (2021). The Benchmark Lottery. arXiv. arXiv preprint arXiv:2107.07002.

Durant, J. H., Wilkins, L., Butler, K., and Cooper, J. F. K. (2021a). Determining the Maximum Information Gain and Optimizing Experimental Design in Neutron Reflectometry Using the Fisher Information. J. Appl. Cryst. 54, 1100–1110. doi:10.1107/S160057672100563X

Durant, J. H., Wilkins, L., and Cooper, J. F. (2021b). Optimising Experimental Design in Neutron Reflectometry. arXiv. arXiv preprint arXiv:2108.05605.

Encyclopedia of Mathematics (1999). Metric. Encyclopedia of Mathematics. Available at: https://encyclopediaofmath.org/wiki/Metric (Accessed June 30, 2021).

Frazier, P. I., and Wang, J. (2016). “Bayesian Optimization for Materials Design,” in Information Science for Materials Discovery and Design. New York, United States: Springer, 45–75. doi:10.1007/978-3-319-23871-5_3

Groitl, F., Graf, D., Birk, J. O., Markó, M., Bartkowiak, M., Filges, U., et al. (2016). CAMEA—A Novel Multiplexing Analyzer for Neutron Spectroscopy. Rev. Scientific Instr. 87, 035109. doi:10.1063/1.4943208

Groitl, F., Toft-Petersen, R., Quintero-Castro, D. L., Meng, S., Lu, Z., Huesges, Z., et al. (2017). MultiFLEXX - the New Multi-Analyzer at the Cold Triple-axis Spectrometer FLEXX. Scientific Rep. 7, 1–12. doi:10.1038/s41598-017-14046-z

Kempa, M., Janousova, B., Saroun, J., Flores, P., Boehm, M., Demmel, F., et al. (2006). The FlatCone Multianalyzer Setup for ILL’s Three-axis Spectrometers. Physica B: Condensed Matter 385, 1080–1082. doi:10.1016/j.physb.2006.05.371

Liang, Q., Gongora, A. E., Ren, Z., Tiihonen, A., Liu, Z., Sun, S., et al. (2021). Benchmarking the Performance of Bayesian Optimization across Multiple Experimental Materials Science Domains. npj Comput. Mater. 7, 188. doi:10.1038/s41524-021-00656-9

Lim, J., Siemensmeyer, K., Čermák, P., Lake, B., Schneidewind, A., and Inosov, D. (2015). BAMBUS: a New Inelastic Multiplexed Neutron Spectrometer for PANDA. J. Phys. Conf. Ser. 592, 012145. doi:10.1088/1742-6596/592/1/012145

Maffettone, P. M., Banko, L., Cui, P., Lysogorskiy, Y., Little, M. A., Olds, D., et al. (2021). Crystallography Companion Agent for High-Throughput Materials Discovery. Nat. Comput. Sci. 1, 290–297. doi:10.1038/s43588-021-00059-2

Noack, M. M., Doerk, G. S., Li, R., Streit, J. K., Vaia, R. A., and Yager, K. G. (2020). Autonomous Materials Discovery Driven by Gaussian Process Regression with Inhomogeneous Measurement Noise and Anisotropic Kernels. Scientific Rep. 10, 17663. doi:10.1038/s41598-020-74394-1

Noack, M. M., Zwart, P. H., Ushizima, D. M., Fukuto, M., Yager, K. G., Elbert, K. C., et al. (2021). Gaussian Processes for Autonomous Data Acquisition at Large-Scale Synchrotron and Neutron Facilities. Nat. Rev. Phys. 3 (10), 685–697. doi:10.1038/s42254-021-00345-y

Teixeira Parente, M., and Brandl, G. (2021). BASE: Benchmarking Autonomous Scattering Experiments. Available at: https://jugit.fz-juelich.de/ainx/base.

Teixeira Parente, M., Brandl, G., Franz, C., Schneidewind, A., and Ganeva, M. (2021). Autonomous Experiments for Neutron Three-axis Spectrometers (TAS) with Log-Gaussian Processes. arXiv. arXiv preprint arXiv:2105.07716.

Rohr, B., Stein, H. S., Guevarra, D., Wang, Y., Haber, J. A., Aykol, M., et al. (2020). Benchmarking the Acceleration of Materials Discovery by Sequential Learning. Chem. Sci. 11, 2696–2706. doi:10.1039/C9SC05999G

Schneidewind, A., and Čermák, P. (2015). Cold Three Axes Spectrometer. J. large-scale Res. Facil. JLSRF 1, A12. doi:10.17815/jlsrf-1-35

Shirane, G., Shapiro, S. M., and Tranquada, J. M. (2002). Neutron Scattering with a Triple-axis Spectrometer: Basic Techniques. Cambridge, United Kingdom: Cambridge University Press. doi:10.1017/CBO9780511534881

Sivia, D. S. (2011). Elementary Scattering Theory: For X-ray and Neutron Users. Oxford, United Kingdom: Oxford University Press. doi:10.1093/acprof:oso/9780199228676.001.0001

Keywords: autonomous experiment, data-driven analysis, cost-benefit analysis, performance, three-axes spectrometry, inelastic neutron scattering, materials analysis

Citation: Teixeira Parente M, Schneidewind A, Brandl G, Franz C, Noack M, Boehm M and Ganeva M (2022) Benchmarking Autonomous Scattering Experiments Illustrated on TAS. Front. Mater. 8:772014. doi: 10.3389/fmats.2021.772014

Received: 07 September 2021; Accepted: 22 December 2021;

Published: 08 February 2022.

Edited by:

Mathieu Doucet, Oak Ridge National Laboratory, United States

Reviewed by:

Stephan Haas, University of Southern California, United States

Yao Wang, Clemson University, United States

Vivek Dixit, Clemson University, United States, in collaboration with reviewer YW

Esther Tsai, Brookhaven National Laboratory (DOE), United States

Copyright © 2022 Teixeira Parente, Schneidewind, Brandl, Franz, Noack, Boehm and Ganeva. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mario Teixeira Parente, m.teixeira.parente@fz-juelich.de
